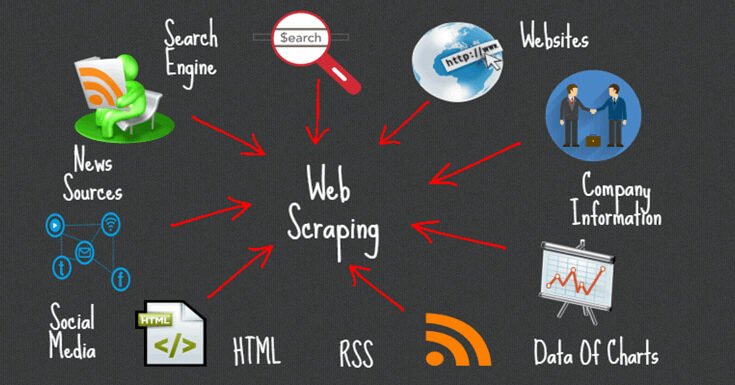

Web scraping is one of the most popular data extraction techniques today. It allows you to collect data from websites and store it in a structured format for later analysis. In this blog post, we will walk you through the basics of web scraping with Python, from the core concepts to the libraries you need to get started. A common way to scrape websites in Python is with a library for parsing HTML and XML, such as Beautiful Soup. So if you want to learn how to extract data from websites using Python, read on!


What is web scraping?

Web scraping is the process of extracting data from websites automatically, using scripts instead of copying it by hand. This data can then be used for a variety of purposes, such as analyzing trends or compiling statistics. There are a few different ways to do web scraping with Python, and the preferred method depends on the task at hand.

A common way to scrape websites is with a library for parsing HTML and XML, such as Beautiful Soup. A parsing library makes it easy to grab specific parts of a page and to search for specific strings. Another tool worth considering is Selenium, which controls a real browser from Python code through the WebDriver interface. Selenium is widely used for testing web pages, and it is also useful for scraping pages that build their content with JavaScript.
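To make “grab specific parts of a page” concrete, here is a minimal sketch that pulls heading text and link targets out of an HTML string. To stay dependency-free it uses the standard library’s html.parser rather than Beautiful Soup; the class name LinkAndHeadingParser, the extract helper, and the sample page are illustrative assumptions. With Beautiful Soup installed, roughly the same result comes from soup.find_all(["h1", "h2", "h3"]) and soup.find_all("a", href=True).

```python
from html.parser import HTMLParser


class LinkAndHeadingParser(HTMLParser):
    """Collect <h1>-<h3> heading text and <a href> links from an HTML page."""

    HEADINGS = {"h1", "h2", "h3"}

    def __init__(self):
        super().__init__()
        self.headings = []  # text of each heading, in document order
        self.links = []     # href values of <a> tags
        self._in_heading = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADINGS:
            self._in_heading = True
            self._buffer = []
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag in self.HEADINGS and self._in_heading:
            self.headings.append("".join(self._buffer).strip())
            self._in_heading = False

    def handle_data(self, data):
        # Only keep text that appears inside a heading.
        if self._in_heading:
            self._buffer.append(data)


def extract(html):
    """Return (headings, links) found in an HTML string."""
    parser = LinkAndHeadingParser()
    parser.feed(html)
    parser.close()
    return parser.headings, parser.links


sample = """
<html><body>
  <h1>Latest posts</h1>
  <p>Read our <a href="/blog/python-scraping">scraping guide</a>.</p>
  <h2>Archive</h2>
  <a href="https://example.com/archive">All posts</a>
</body></html>
"""

headings, links = extract(sample)
print(headings)  # ['Latest posts', 'Archive']
print(links)     # ['/blog/python-scraping', 'https://example.com/archive']
print("scraping guide" in sample)  # True, a plain substring search
```

The event-driven HTMLParser only sees tags as they stream past, which is why the class tracks whether it is currently inside a heading; a tree-building library like Beautiful Soup lets you query the parsed document directly instead.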

Once you have the information you want, there are a number of ways to store it. You can keep it in simple Python dicts or NumPy arrays, or write it out with Python’s built-in csv module or the pandas library. Whatever storage mechanism you choose, make sure that it’s easy to access and keeps a consistent structure (for example, the same fields for every record) so that your scraping script can run without errors.
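As a sketch of the csv option, assuming each scraped item has already been turned into a dict with the same keys (the field names, records, and the to_csv helper below are made up for illustration), csv.DictWriter writes them out with a header row:

```python
import csv
import io

# Hypothetical scraped records: one dict per item, all with the same keys.
records = [
    {"title": "Intro to scraping", "url": "https://example.com/a", "views": 120},
    {"title": "Parsing HTML", "url": "https://example.com/b", "views": 87},
]


def to_csv(rows, fieldnames):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


text = to_csv(records, fieldnames=["title", "url", "views"])
print(text)

# Reading it back: every value comes back as a string.
rows = list(csv.DictReader(io.StringIO(text)))
print(rows[0]["views"])  # 120 (the string '120', not an int)
```

To write to a file instead, open it with open("posts.csv", "w", newline="", encoding="utf-8") and pass that file object to DictWriter. With pandas, pd.DataFrame(records).to_csv("posts.csv", index=False) does the same job. Because CSV stores everything as text, numeric fields such as views need converting after you read them back.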

Different types of web scraping

There are a number of different types of web scraping techniques that can be used. Here we will discuss some of the most common ones.

1) Text scraping: This is the most basic form of web scraping and involves extracting data from websites by parsing text content.

2) XML scraping: XML scraping is a more advanced technique that uses XML documents as the source of data. It is useful for extracting data from websites that use custom markup formats or for extracting data that is buried inside complex document structures.

3) DOM scraping: DOM (Document Object Model) scraping is another more advanced form of web scraping that uses the structure and elements of a website’s HTML/XML pages to extract data. This can be useful for extracting user-generated content such as comments, blog posts, or any other information contained within a website’s pages.

4) Spidering: Spidering is a technique where a program automatically visits pages on a website, collects the URLs linked from each one, and follows them to discover further pages. This can be useful for gathering details about a website as a whole, such as its URL structure or the list of links on each page.
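As an example of XML scraping that can also feed spidering, the sketch below reads a sitemap (an XML file, in the sitemaps.org format, that many sites publish to list their pages) using the standard library’s xml.etree.ElementTree. The sitemap_urls helper and the sample sitemap are invented for illustration; a real sitemap would be downloaded first.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Invented sample; in practice this text would be downloaded from the site.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc><lastmod>2024-01-15</lastmod></url>
  <url><loc> https://example.com/blog/ </loc></url>
</urlset>"""


def sitemap_urls(xml_text):
    """Return (loc, lastmod) pairs from a sitemap; lastmod is None if absent."""
    root = ET.fromstring(xml_text)
    results = []
    for url in root.findall("sm:url", SITEMAP_NS):
        loc = url.findtext("sm:loc", namespaces=SITEMAP_NS)
        if not loc:
            continue  # skip malformed entries without a <loc>
        lastmod = url.findtext("sm:lastmod", namespaces=SITEMAP_NS)
        results.append((loc.strip(), lastmod))
    return results


for loc, lastmod in sitemap_urls(SAMPLE_SITEMAP):
    print(loc, lastmod)
```

The namespace mapping matters here: sitemap elements live in the sitemaps.org namespace, so an unprefixed root.findall("url") would find nothing.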

How to get started with web scraping with Python

If you want to start scraping the web for data, or just learn more about Python and its web scraping capabilities, this guide is for you. There are a few different ways you can get started with web scraping with Python, so we’ll walk you through each one.

The first way to get started is with the Requests library. It makes it easy to download data from websites by providing a simple interface for sending HTTP requests and reading the responses. A typical first exercise is to use Requests to download the HTML of a website’s homepage.
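Here is a minimal sketch of that first step: download a page and return its HTML as text. To keep it runnable without third-party packages it uses the standard library’s urllib.request, and the docstring shows the rough Requests equivalent. The fetch_html name and the User-Agent string are illustrative assumptions.

```python
import urllib.request

# Illustrative User-Agent; replace it with something that identifies your scraper.
USER_AGENT = "example-scraper/0.1"


def fetch_html(url, timeout=10.0):
    """Download `url` and return the response body as text.

    Rough Requests equivalent, if the third-party package is installed:
        resp = requests.get(url, headers={"User-Agent": USER_AGENT}, timeout=timeout)
        resp.raise_for_status()
        return resp.text
    """
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    # For HTTP URLs, urlopen raises urllib.error.HTTPError on 4xx/5xx status
    # codes, which plays the role of Requests' raise_for_status().
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # Use the charset the server declares, falling back to UTF-8.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

Calling fetch_html("https://example.com/") would return that homepage’s HTML as a string, ready to hand to a parser. Always pass a timeout: without one, both urllib and Requests can wait indefinitely on an unresponsive server.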

After learning how to use the Requests library, you can also explore other tools that handle HTTP requests, such as the Scrapy framework. These tools provide additional features and functionality that can be useful when extracting data from websites.

Finally, you can create your own web scrapers using Python and some basic coding principles. By doing this, you’ll gain a deeper understanding of how web scrapers work and be able to build custom tools for extracting data from websites.
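One way to apply those principles is to keep fetching and parsing as separate, swappable functions and wrap them in a loop that pauses between pages and retries transient failures. The sketch below does that; scrape_pages and its fetch/parse hooks are hypothetical names, and the delay and retry defaults are arbitrary starting points rather than recommendations for any particular site.

```python
import time
from typing import Callable, Dict, List


def scrape_pages(
    urls: List[str],
    fetch: Callable[[str], str],
    parse: Callable[[str], Dict[str, str]],
    delay: float = 1.0,
    retries: int = 2,
) -> List[Dict[str, str]]:
    """Fetch each URL, parse it into a record, and collect the records.

    `fetch` and `parse` are passed in (hypothetical hooks), so the same loop
    works with Requests, urllib, or a test stub.
    """
    records = []
    for i, url in enumerate(urls):
        if i > 0:
            time.sleep(delay)  # pause between pages to avoid hammering the site
        for attempt in range(retries + 1):
            try:
                html = fetch(url)
                break
            except OSError:
                if attempt == retries:
                    raise  # give up after the last attempt
                time.sleep(delay * 2 ** attempt)  # back off before retrying
        record = parse(html)
        record["url"] = url
        records.append(record)
    return records
```

Catching OSError covers both urllib’s URLError and Requests’ RequestException, since both derive from it, while letting bugs in parse surface immediately instead of being retried.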

Tips for conducting successful web scrapes

If you’re new to web scraping, don’t be discouraged! There are a few tips that will help make your scrapes more successful.

1. Start with easy targets. Don’t try to scrape the entirety of a website right off the bat – start small by targeting specific pages or sections. This will help you get comfortable with the process and learn what sorts of information you can extract from a given site.

2. Use libraries and frameworks. There are plenty of Python libraries and frameworks designed for web scraping, so it’s worth checking out some of them if you’re new to the process. These tools can make your life much easier by providing common functions and structures for working with web pages.

3. Be patient and persistent. It can take some time to get good at web scraping – don’t get discouraged if your first attempts aren’t perfect! Just keep practicing, and eventually you’ll be able to extract valuable data from even the most challenging websites.

Web Crawling

Crawling is an important tool for researchers because it allows them to collect data from a large number of sources. By spidering specific websites or by using automated crawlers, researchers can collect data from all the pages on those websites. Search engine scanning is also useful for collecting data: by submitting different search queries to different search engines, researchers can retrieve results that are relevant to their research project. In both cases, the collected information can then be used to create reports or databases covering a variety of topics.
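Spidering a single site can be sketched as a breadth-first crawl: fetch a page, collect its links, resolve them to absolute URLs, and queue any unseen link on the same host. In this minimal sketch, crawl and its fetch hook are hypothetical names; fetch is any function that returns a page’s HTML (for example one built on Requests), and max_pages caps the crawl. A real crawler would also pause between requests and follow the site’s crawling rules.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class _LinkCollector(HTMLParser):
    """Gather href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl of pages on start_url's host.

    `fetch(url) -> html` is supplied by the caller (hypothetical hook).
    Returns the visited URLs in the order they were fetched.
    """
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        visited.append(url)
        collector = _LinkCollector()
        collector.feed(html)
        for href in collector.hrefs:
            # Resolve relative links and drop #fragments so duplicates match.
            link, _ = urldefrag(urljoin(url, href))
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return visited
```

Using a deque gives breadth-first order, so pages close to the start URL are fetched first; swapping popleft() for pop() would make the crawl depth-first.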

There are several software programs that can help automate these processes and make them more time-efficient. For example, Scrapy is an open-source Python framework designed for crawling websites and extracting structured data from their pages. Several other free programs can also be used for web crawling.

Conclusion

Web scraping can be a great way to gather data from websites for your own purposes. In this article, we covered the basic ideas of web scraping with Python and provided a few tips and tricks to help make the process easier. With these basics, you are ready to start extracting data from websites with tools such as Selenium or Scrapy. So whether you’re looking for ways to improve your blog content or just want to get a little more out of the internet, learning how to scrape web pages is an excellent step in that direction. Thanks for reading!